Intuitions

1. We learn from both positive and negative feedback.

2. This motivates the famous reinforcement learning (RL) in robotics.

3. In RL, we can give both positive and negative reward and the objective is to find an optimal policy function which maximizes the expected sum of reward.

What I am trying to do is

1. I assume daily-life scenarios.

2. What I mean by that is, a) robot acts, b) human interacts by giving positive or negative response. c) robot updates, and repeat.

3. This scenario closely resembles the RL as the response can be thought as the reward.

4. However, RL often requires a dynamic model and otherwise a bunch of data are needed to find an optimal policy function.

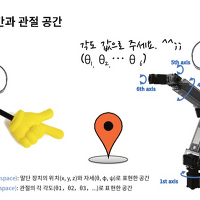

5. We cast the policy optimization as a supervised learning problem using regrefication finding a function which maps a state space to an action space.

Frankly, I still cannot convince myself why regrefication is so useful compared to other RL methods.

* Some benefits of regrefication

1. Supervised learning methods do not require a dynamic model. (Nor does RL..)

2. It directly optimizes a function which satisfies certain conditions (close to positive, far from negative).

3. It is a new framework and it's fun!

Scenario

Let's assume that you are given a task to make a social robot that can navigate properly and socially in a dynamic environment with pedestrians. At first, a robot is trained with minimal information, and thus, it won't work satisfactorily with respect to social criterion. However, this robot can interact with humans with binary signals, indicating good and bad and should evolve gradually by the help of humans' responses.

Then, how can we not get fired?

Above figure illustrates interactions between humans and a robot.

Perhaps, we can tackle this problem in two ways.

1. Find policy, directly.

2. Find cost map first and then find the policy which minimizes it.

*Regrefication

Recently, I made an interesting regression framework, which I refer to as a regrefication. This bizarre looking work is a combination of regression and classification. Following is the formulation for regrefication using sequential quadratic programming. Since the hessian of the loss function is indefinite, Newton-KKT Interior-Point Method is used to solve indefinite QP (http://www.montefiore.ulg.ac.be/~absil/Publi/indefQP.htm).

I also made a video cilp illustrating the concept of regrefication and below is the youtube video.

We can clearly see that the resulting function tries to be close to positive data (blue circles) while being far from negative data (red crosses),

'Enginius > Robotics' 카테고리의 다른 글

| ROS Manipulation with Dynamixel (0) | 2016.01.11 |

|---|---|

| Reviews that I got from ICRA and IROS (0) | 2015.11.02 |

| Theoretical Analysis of Behavior Cloning (0) | 2015.10.10 |

| Robotics paper list (0) | 2015.10.06 |

| 눈이 올 때, 교통 사고를 줄여보자! (0) | 2015.08.21 |